Azure integration

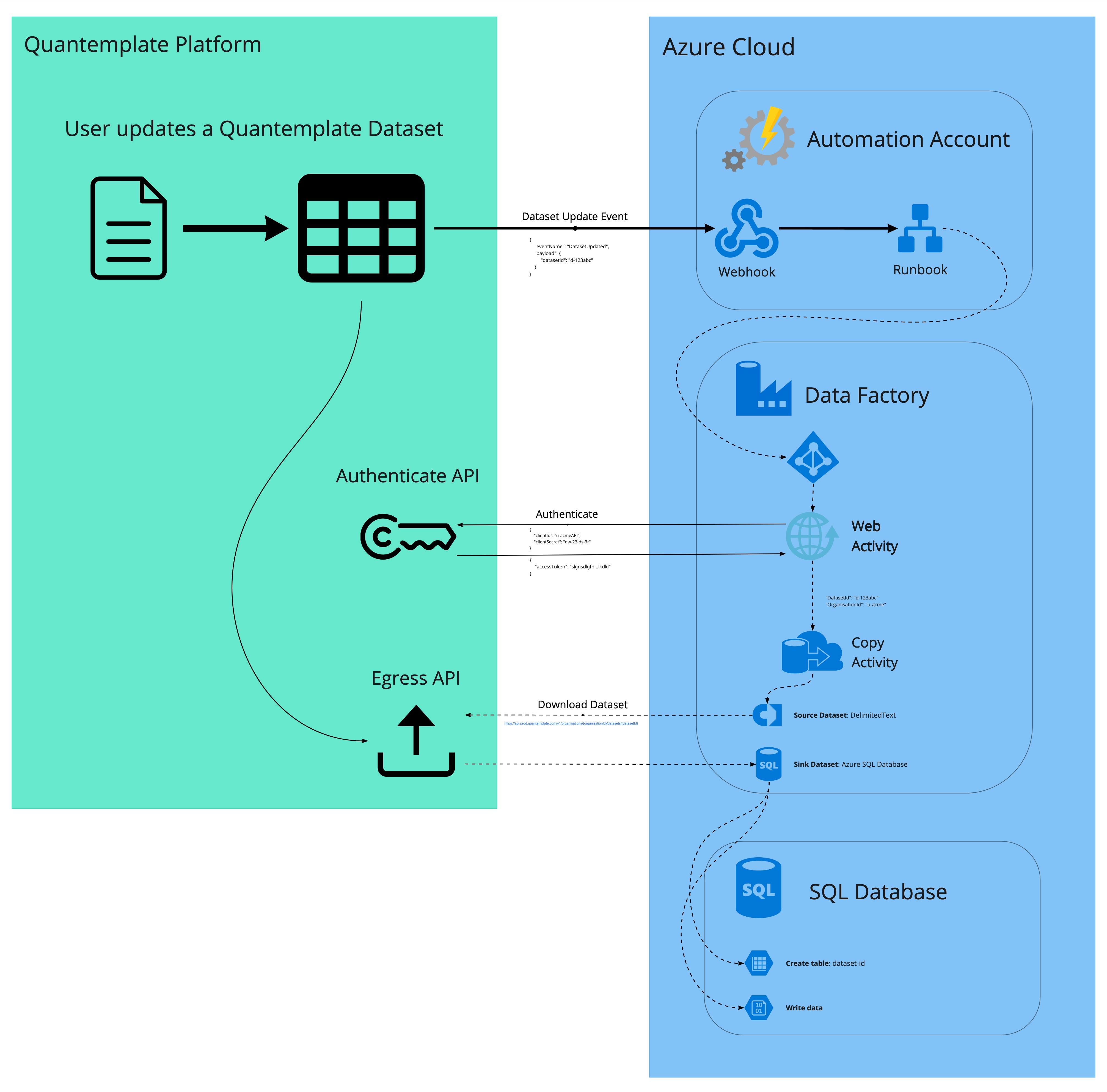

In this overview, we'll look at the building blocks required to use the Quantemplate API to listen for Dataset Updated events and then extract that dataset to a SQL table.

This video shows the end-to-end process and the remaining documentation will add some technical detail.

Receiving notifications from Quantemplate

- Follow this guide to create a Runbook and a Webhook

- Take the webhook URL and follow the notifications example to receive events from Quantemplate(Important note: subscriptions work only for the datasets shared with the whole organisation)

Events schema

Quantemplate subscription mechanism supports two events: DatasetUpdated and PipelineCompleted.

- DatasetUpdated:

The DatasetUpdated event is sent when a dataset shared with the organization is updated.

| Property | Type | Description |

|---|---|---|

id | String (UUID) | A unique identifier for the event, useful for tracking and referencing the specific event. |

name | String | The name of the event |

created | String (Date-Time) | The timestamp when the event was created, providing the exact time the event was generated. |

payload | Object | An object containing additional details about the event. |

payload.datasetId | String | The identifier of the dataset related to the event, indicating which dataset was updated. |

payload.eventName | String | The name of the event |

- PipelineCompleted:

The PipelineCompleted event is sent when the pipeline has been shared with the organization and the new pipeline run has been completed."

| Property | Type | Description |

|---|---|---|

id | String (UUID) | A unique identifier for the event, useful for tracking and referencing the specific event. |

name | String | The name of the event |

created | String (Date-Time) | The timestamp when the event was created, providing the exact time the event was generated. |

payload | Object | An object containing additional details about the event. |

payload.pipelineId | String | The identifier of the pipeline related to the event, indicating which pipeline was involved. |

payload.pipelineExecutionId | String | The identifier for the specific execution instance of the pipeline. |

payload.status | String | The status of the pipeline execution, indicating whether it succeeded, failed, etc. |

payload.eventName | String | The name of the event |

to filter out specific event we can add additional check into our script:

$object = $WebHookData.RequestBody | ConvertFrom-Json

$DatasetId = $object.payload.datasetId

$eventName = $object.name

if ($eventName -ne "DatasetUpdated")

{

Write-Output "Not supported event: $eventName"

return

}

else

{

continue with the script the event is DatasetUpdated

}Running a Data Factory Pipeline from a Runbook

Prerequisites:

- Example uses credentials from service principal created specifically for azure datafactory to authenticate from the automation script into datafactory instance(see Application and service principal objects in Microsoft Entra ID it is also possible to user other auth mechanisms e.g managed identity(see What are managed identities for Azure resources)

- We filter out

PipelineCompletedevent - Following automating variables are set (see Manage variables in Azure Automation):

- ADF-CredentialName

- ADF-ResourceGroupName

- ADF-DataFactoryName

- ADF-SubscriptionID

Additional note: Azure Modules user experience has been updated since the video was made. Azure support can revert you back to previous user experience, if needed.

Below is an example PowerShell script with inspiration from this guide:

param (

[object] $WebHookData

)

Write-Output "Starting run book"

if ($WebHookData){

# Extract the dataset ID from the payload

$object = $WebHookData.RequestBody | ConvertFrom-Json

$DatasetId = $object.payload.datasetId

$eventName = $object.name

} else {

Write-Output 'Not webhook'

$DatasetId = "not-defined"

}

if ($eventName -eq "DatasetUpdated")

{

$PipelineName = "QT_fetch_data"

$CheckLoopTime = 5

#Stop on any error!

$ErrorActionPreference = "Stop"

#Get and set configs

$CredentialName = Get-AutomationVariable -Name 'ADF-CredentialName'

$ResourceGroupName = Get-AutomationVariable -Name 'ADF-ResourceGroupName'

$DataFactoryName = Get-AutomationVariable -Name 'ADF-DataFactoryName'

$SubscriptionID = Get-AutomationVariable -Name 'ADF-SubscriptionID'

Write-Output "$CredentialName, $ResourceGroupName, $DataFactoryName, $SubscriptionID"

#Get credentials

$AzureDataFactoryUser = Get-AutomationPSCredential -Name $CredentialName

Write-Output "Credentials: $AzureDataFactoryUser"

try{

#Use credentials and choose subscription

Add-AzureRmAccount -Credential $AzureDataFactoryUser | Out-Null

Write-Output "Added Account"

Set-AzureRmContext -SubscriptionId $SubscriptionID | Out-Null

Write-Output "set context"

# Get data factory object

$df=Get-AzDataFactoryV2 -ResourceGroupName $ResourceGroupName -Name $DataFactoryName

#If exists - run it

If($df) {

Write-Output "Connected to data factory $DataFactoryName on $ResourceGroupName as $($AzureDataFactoryUser.UserName)"

"{""DatasetID"": ""$DatasetId""}" | Out-File -FilePath .\params.json

Get-Content -Path .\params.json

$RunID = Invoke-AzDataFactoryV2Pipeline -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -PipelineName $PipelineName -ParameterFile .\params.json

$RunInfo = Get-AzDataFactoryV2PipelineRun -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -PipelineRunId $RunID

Write-Output "`nPipeline triggered!"

Write-Output "RunID: $($RunInfo.RunId)"

Write-Output "Started: $($RunInfo.RunStart)`n"

$sw = [system.diagnostics.stopwatch]::StartNew()

While (($Pipeline = Get-AzDataFactoryV2PipelineRun -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -PipelineRunId $RunID |Select -ExpandProperty "Status") -eq "InProgress")

{

#Write-Output

$RunInfo = Get-AzDataFactoryV2PipelineRun -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -PipelineRunId $RunID

Write-Output "`rLast status: $($RunInfo.Status) | Last updated: $($RunInfo.LastUpdated) | Running time: $($sw.Elapsed.ToString('dd\.hh\:mm\:ss'))" #-NoNewline

Start-Sleep $CheckLoopTime

}

$sw.Stop()

$RunInfo = Get-AzDataFactoryV2PipelineRun -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -PipelineRunId $RunID

Write-Output "`nFinished running in $($sw.Elapsed.ToString('dd\.hh\:mm\:ss'))!"

Write-Output "Status:"

Write-Output $RunInfo.Status

if ($RunInfo.Status -ne "Succeeded"){

throw "There was an error with running pipeline: $($RunInfo.PipelineName). Returned message was:`n$($RunInfo.Message)"

}

}

}

Catch{

$errorMessage = $_.Exception.Message

$errorTimestamp = Get-Date -Format "yyyy-MM-dd HH:mm:ss"

$logMessage = "Error occurred at ${errorTimestamp}: ${errorMessage}"

Write-Output $logMessage

Throw $_

}

}

else

{

Write-Output "Not supported event: $eventName"

return

}Data Factory Copy Pipeline

In this example, the pipeline consists of two simple steps:

- A Web Activity to use the client-id and client-secret to authenticate with Quantemplate

- A Copy Activity to fetch the data from Quantemplate and to write that to a SQL DB instance

Prerequisite: Azure SQL DB InstanceFor this step you will require the SQL DB instance to have been created within your Azure account and for the Data Factory to have the required credentials to write to that instance.

This Azure guide details the steps required.

Login Web Activity

The configuration for this activity can be obtained from the Setting Up a Connection example.

URL: https://accounts.prod.quantemplate.com/auth/realms/qt/protocol/openid-connect/token

Method : POST

Header: Content-Type : application/x-www-form-urlencoded

Body: grant_type=client_credentials&client_id=@{variables('ClientID')}&client_secret=@{variables('ClientSecret')}

Copy data Activity

Source data from Quantemplate

This guide provides a good overview of the steps required to configure the Copy data Activity to fetch data from a service like Quantemplate.

The Download Dataset endpoint description defines the URL and parameters required.

Write data to SQL DB

This guide details how to configure the "Sink" part of the Copy Activity to write the data to the DB.

Updated 10 months ago